TEXT 2 FORM 3D

Enhancing structural form-finding through a text-based AI engine coupled with computational graphic statics

In collaboration with Zifeng GUO, Pierluigi D’ACUNTO

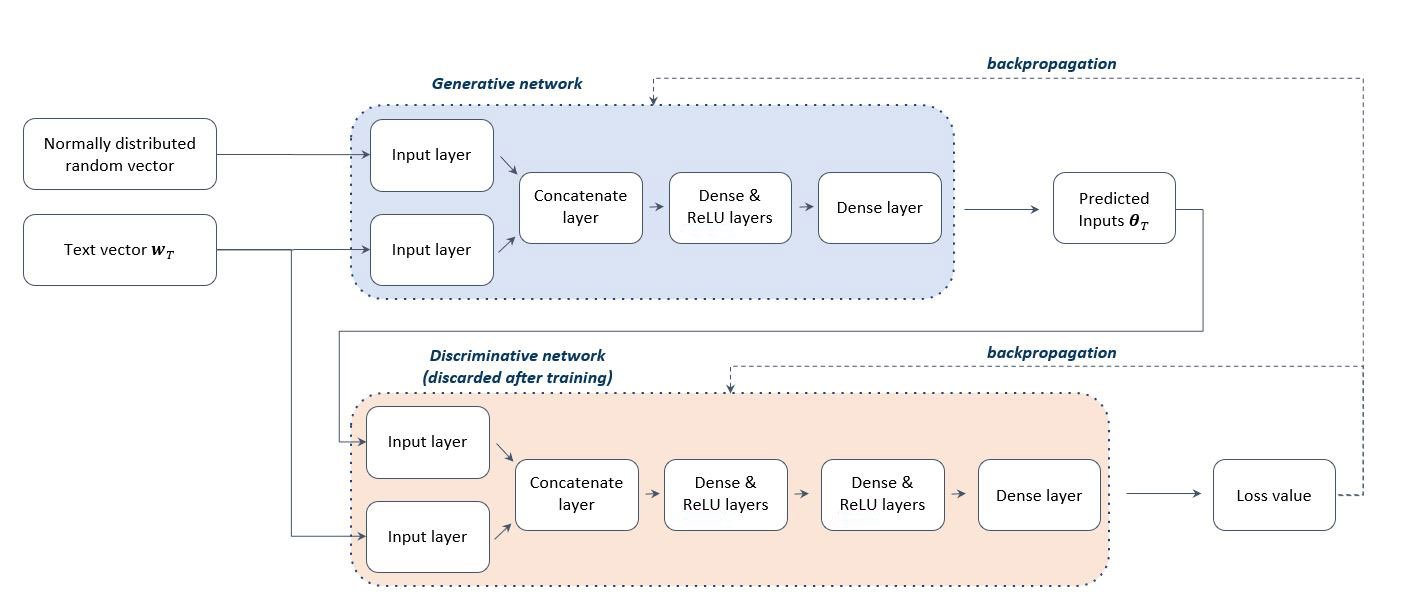

This article introduces Text2Form3D, a machine-learning-based design framework to explore the embedded descriptive representation of structural forms. Text2Form3D relies on a deep neural network algorithm that joins word embeddings, a natural language processing (NLP) technique, with Combinatorial Equilibrium Modeling (CEM), a form-finding method based on graphic statics. Text2Form3D is trained on a dataset of structural design options generated via the CEM and labeled with vocabulary acquired from architectural and structural competition reports. For the labeling process, an unsupervised clustering algorithm, Self-Organizing Maps (SOM), is used to cluster the machine-generated design options by quantitative criteria. The clusters are then labeled by designers using descriptive text. After training, Text2Form3D can autonomously generate new structural solutions in static equilibrium from a user-defined descriptive query. The generated structural solutions can be further evaluated by various quantitative and qualitative criteria to narrow the design space towards a solution that fits the designer's preferences.

This article focuses on structural design – a discipline at the interface between architecture and structural engineering – as a case study to test the use of AI to assist the human designer within the design process. The present study builds upon the interaction between human and artificial intelligence, with the machine being able to process semantic requests related to structural forms that go beyond the purely quantitative aspects of structural engineering. The study proposes a shift from a conventional, deterministic form-finding process (i.e., generation of forms that are structurally optimized for given boundary conditions) to an open, creative process in which text inputs from the designer are translated into spatial structures in static equilibrium.

The main outcome of this study is the development of Text2Form3D, a machine-learning-based design framework to explore the semantic representation of structural design forms. Text2Form3D combines a Natural Language Processing (NLP) algorithm, which translates text into numerical features, with the Combinatorial Equilibrium Modeling (CEM) form-finding algorithm, which generates structural forms from topological and metric inputs. This connection between NLP and CEM allows Text2Form3D to suggest possible design parameters based on the input text specified by the designer, thus allowing the designer to explore the design space of the CEM method semantically.

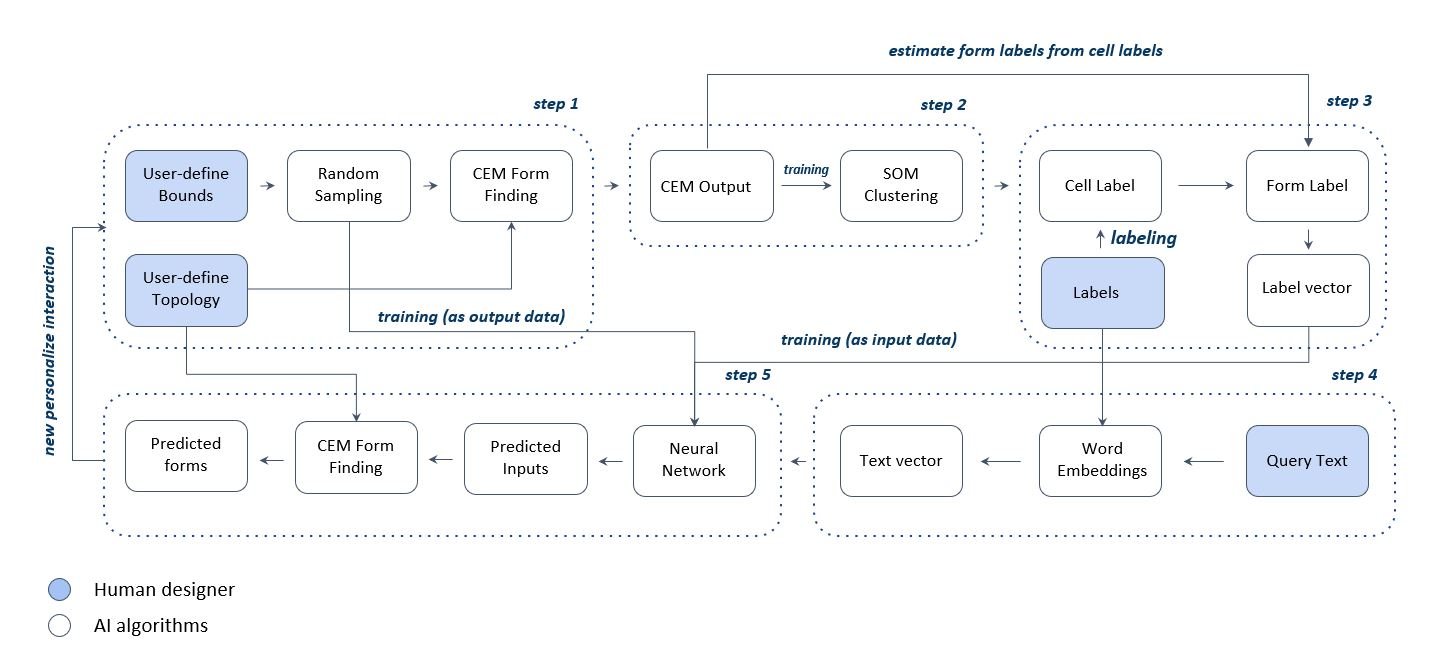

STEP 1 CEM algorithm - generating a dataset of various structural forms with randomly initialized design parameters.

STEP 2 Self-Organizing Maps - clustering generated forms.

STEP 3 Trained SOM - labeling several thousand design options using vocabulary acquired from architectural and structural competition reports.

STEP 4 Word embedding - processing the text labels.

STEP 5 DNN - training the neural network using the text vectors as the input and the CEM design parameters as the output; then, generating the CEM input parameters from new embedding vectors produced from any input text.
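
Step 5 is, in essence, a supervised regression from text vectors to CEM parameters. The sketch below illustrates that mapping with a one-hidden-layer perceptron in NumPy, trained by full-batch gradient descent on mean squared error. The architecture, activation, learning rate and the names `train_mlp` and `predict` are assumptions for illustration; the article does not specify the network used by Text2Form3D.

```python
import numpy as np

def train_mlp(X, Y, hidden=64, lr=0.05, epochs=500, seed=0):
    """Fit a one-hidden-layer MLP mapping text vectors X to CEM parameters Y.

    Hypothetical stand-in for the deep network in the text: ReLU hidden
    layer, linear output, full-batch gradient descent on mean squared error.
    """
    rng = np.random.default_rng(seed)
    n_in, n_out = X.shape[1], Y.shape[1]
    W1 = rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, np.sqrt(1.0 / hidden), (hidden, n_out))
    b2 = np.zeros(n_out)
    losses = []
    for _ in range(epochs):
        # Forward pass.
        H_pre = X @ W1 + b1
        H = np.maximum(H_pre, 0.0)
        P = H @ W2 + b2
        err = P - Y
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: gradient of the mean squared error.
        dP = 2.0 * err / err.size
        dW2 = H.T @ dP
        db2 = dP.sum(axis=0)
        dH_pre = (dP @ W2.T) * (H_pre > 0)
        dW1 = X.T @ dH_pre
        db1 = dH_pre.sum(axis=0)
        # Gradient descent update.
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2
    return (W1, b1, W2, b2), losses

def predict(params, X):
    """Predict CEM parameters for new text vectors."""
    W1, b1, W2, b2 = params
    return np.maximum(X @ W1 + b1, 0.0) @ W2 + b2
```

In this sketch, `X` would hold one label or embedding vector per tower and `Y` the CEM parameters that generated it; at inference time, `predict` turns a new text vector into CEM inputs that can be passed back to the form-finding step.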

The above design workflow was tested in a design experiment to generate towers.

The generation of the structural forms through the CEM relied on a fixed topology, i.e., all the generated forms have the same number of nodes and edges.
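
To make the idea of a fixed topology with variable metric inputs concrete, the sketch below lays out a single CEM trail node by node. It is heavily simplified and assumes a trail with no deviation edges, so the force in each trail edge is just the running sum of the external loads above it and the geometry reduces to the funicular polygon of those loads. The function name `form_find_trail` is hypothetical; the real CEM also handles deviation edges and multiple interacting trails.

```python
import numpy as np

def form_find_trail(origin, loads, lengths):
    """Lay out one trail sequentially (simplified CEM sketch, no deviation edges).

    origin:  start node of the trail (e.g. the top of a tower).
    loads:   external load vector applied at each node before its trail edge.
    lengths: prescribed length of each trail edge (a metric input).
    The node count is fixed by the topology: len(loads) + 1 nodes.
    """
    if len(loads) != len(lengths):
        raise ValueError("one load and one length per trail edge")
    nodes = [np.asarray(origin, dtype=float)]
    force = np.zeros(3)
    for load, length in zip(loads, lengths):
        # Nodal equilibrium: the outgoing trail force carries all loads above it.
        force = force + np.asarray(load, dtype=float)
        direction = force / np.linalg.norm(force)
        # Place the next node along the force direction at the given length.
        nodes.append(nodes[-1] + length * direction)
    # `force` is what the last edge delivers to the support.
    return np.array(nodes), force
```

Changing only `lengths` or the load vectors, while keeping their count, changes the shape but not the node-edge relations, which mirrors the fixed-topology setup described above.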

Based on this setup, a dataset of 87,728 towers was created. The above figure shows the topology diagrams (i.e., node-edge relations) and the form diagrams (i.e., the generated structural forms) of two different combinations of input metric parameters.
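
The dataset itself comes from repeatedly drawing metric parameters for the same topology. A minimal sketch, assuming independent uniform sampling within per-parameter bounds (the article only states that parameters were randomly initialized), could look like this. The function name and any bounds passed to it are hypothetical placeholders, not the values used in the study.

```python
import numpy as np

def sample_parameters(n_samples, lower, upper, seed=0):
    """Draw random CEM metric parameters for a fixed topology.

    Each row is one design option; each column is one metric input
    (for example a trail-edge length or a deviation-edge force).
    Values are uniform in [lower, upper) per column.
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    rng = np.random.default_rng(seed)
    # lower/upper broadcast across the sample axis.
    return rng.uniform(lower, upper, size=(n_samples, lower.size))
```

Calling `sample_parameters(87_728, lower, upper)` would yield one parameter row per tower; each row would then be passed through the CEM to obtain the corresponding form diagram.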

Labeling the generated forms one by one with text would require an enormous amount of manual work. The experiment therefore relied on a cluster-based labeling strategy to save time and improve labeling consistency, under the assumption that forms within the same cluster can be described by similar text labels.

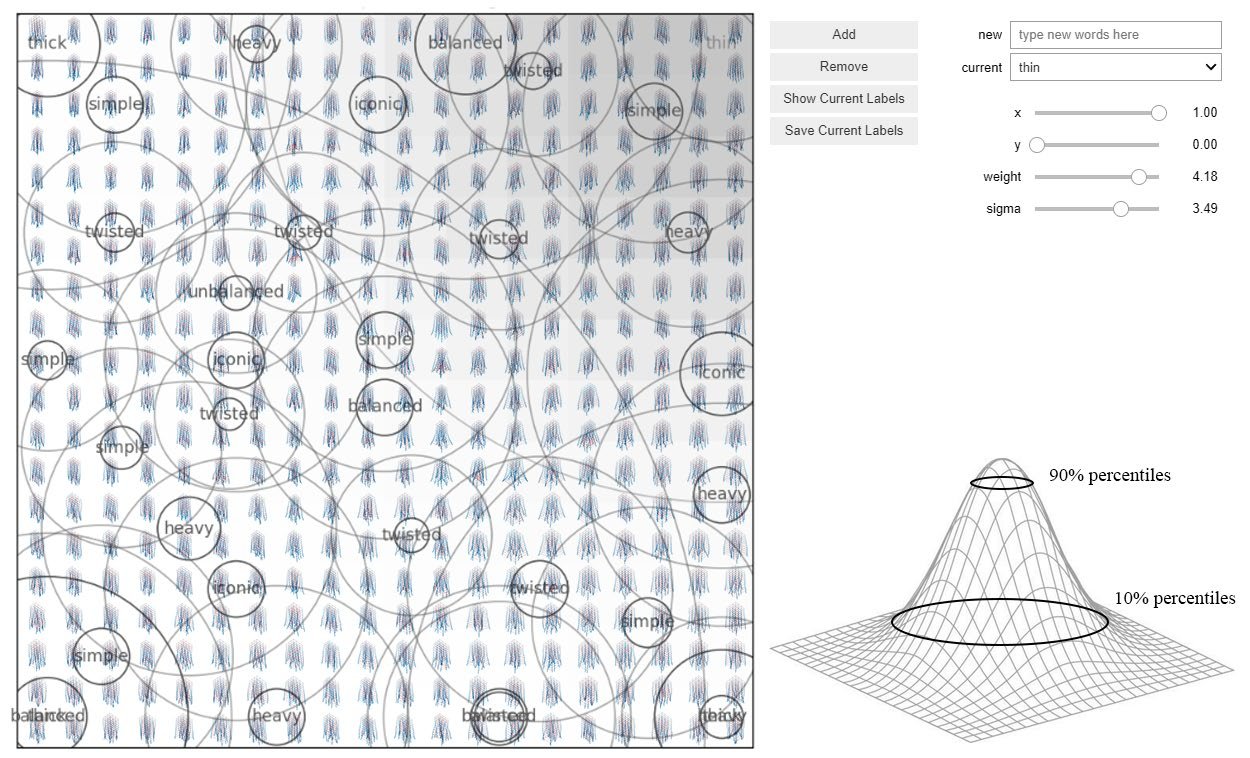

To support the labeling process, a SOM was trained to cluster the generated forms based on their geometric characteristics. The above figure shows the trained SOM, consisting of a grid of 20×20 cells.
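
A 20×20 SOM of this kind can be sketched in a few dozen lines of NumPy. The version below uses online updates with a Gaussian neighborhood and linearly decaying learning rate and radius; these schedule choices, the random-sample initialization and the function names are assumptions, and the input `data` stands for whatever geometric descriptors of the towers are used, which the article does not list.

```python
import numpy as np

def best_matching_unit(weights, x):
    """Return (row, col) of the cell whose weight vector is closest to x."""
    d = np.sum((weights - x) ** 2, axis=-1)
    r, c = np.unravel_index(np.argmin(d), d.shape)
    return int(r), int(c)

def train_som(data, rows=20, cols=20, iterations=5000, lr0=0.5, seed=0):
    """Train a rectangular self-organizing map with online updates.

    data: (n_samples, n_features) geometric descriptors of the forms.
    Returns weights of shape (rows, cols, n_features).
    """
    rng = np.random.default_rng(seed)
    n_features = data.shape[1]
    sigma0, sigma_end = max(rows, cols) / 2.0, 1.0
    # Initialize every cell with a randomly chosen sample.
    idx = rng.integers(0, len(data), size=rows * cols)
    weights = data[idx].reshape(rows, cols, n_features).astype(float)
    # Grid coordinates of every cell, shape (rows, cols, 2).
    grid = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1)
    for t in range(iterations):
        frac = t / iterations
        lr = lr0 * (1.0 - frac)                         # decaying learning rate
        sigma = sigma0 * (1.0 - frac) + sigma_end * frac  # shrinking neighborhood
        x = data[rng.integers(len(data))]
        bmu = np.array(best_matching_unit(weights, x))
        dist2 = np.sum((grid - bmu) ** 2, axis=-1)
        h = np.exp(-dist2 / (2.0 * sigma ** 2))         # Gaussian neighborhood
        weights += lr * h[..., None] * (x - weights)
    return weights
```

After training, `best_matching_unit` assigns each tower to one of the 400 cells, so designers label cells (or groups of cells) rather than tens of thousands of individual forms.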

To support the labeling process, several common words used to describe architectural projects were identified by crawling jury commentaries on winning projects in architectural competitions. The crawling process focused specifically on projects that included the keyword "tower" to match the database for this experiment, resulting in more than 200 commentaries. These commentaries were pre-processed, yielding 105 commonly used adjectives. Finally, these adjectives were manually filtered to remove redundancy, resulting in 68 meaningful adjectives from which the text labels used in this experiment were created.
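
The article does not detail the pre-processing step. As one possible reading, the sketch below counts occurrences of words from a candidate adjective lexicon across the commentaries and keeps the most frequent ones; a lexicon lookup is used here in place of part-of-speech tagging, and both the lexicon and the function name `top_adjectives` are hypothetical. The final manual filtering remains a human step.

```python
import re
from collections import Counter

def top_adjectives(commentaries, adjective_lexicon, k):
    """Count lexicon adjectives across jury commentaries and keep the k most frequent."""
    lexicon = {a.lower() for a in adjective_lexicon}
    counts = Counter()
    for text in commentaries:
        # Lowercase word tokens, allowing hyphenated words such as "free-form".
        tokens = re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower())
        counts.update(tok for tok in tokens if tok in lexicon)
    return [word for word, _ in counts.most_common(k)]
```

For example, with the commentaries "A slender and elegant tower.", "The slender tower is bold." and "An elegant, slender silhouette." and the lexicon `["slender", "elegant", "bold", "heavy"]`, the call with `k=2` returns `["slender", "elegant"]`.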

The above figure shows the user interface of the text-based labeling process, where each adjective is associated with a Gaussian distribution, shown by circles representing its 10th and 90th percentiles.
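
One way to read this interface is that each adjective places an isotropic Gaussian on the 20×20 SOM grid, and each cell's association value is the Gaussian evaluated at that cell. The sketch below assumes a peak value of 1 at the chosen centre and interprets the circles as radii enclosing 10% and 90% of the Gaussian's mass; neither detail is stated in the article, and the function names are hypothetical. For a 2D isotropic Gaussian with standard deviation $\sigma$, the mass inside radius $r$ is $1 - e^{-r^2/(2\sigma^2)}$, so the radius enclosing a fraction $p$ is $r_p = \sigma\sqrt{-2\ln(1-p)}$, giving $r_{0.1} \approx 0.459\sigma$ and $r_{0.9} \approx 2.146\sigma$.

```python
import numpy as np

def association_map(center, sigma, rows=20, cols=20):
    """Association value of one adjective for every SOM cell.

    Unnormalized isotropic Gaussian centred on the cell chosen by the
    designer: the centre cell gets 1.0, values fall off with grid distance.
    """
    r, c = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    d2 = (r - center[0]) ** 2 + (c - center[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))

def percentile_radius(sigma, p):
    """Radius of the circle enclosing a fraction p of a 2D isotropic Gaussian."""
    return sigma * np.sqrt(-2.0 * np.log(1.0 - p))
```

Under these assumptions, a designer who drags the 90% circle to a radius of 4 cells is implicitly setting $\sigma \approx 4 / 2.146 \approx 1.86$ cells.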

The generated labels represent the association values between structural forms and the selected adjectives. Therefore, the text label of each structural form can be represented as a vector consisting of its association values with all adjectives.
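
Given one association map per adjective and the SOM cell of each form, building the label vectors is a single gather operation: every form inherits the values of its cell, which is exactly what makes cluster-based labeling cheap. The sketch assumes the maps are stacked into an array of shape (number of adjectives, 20, 20); the function name `label_vectors` is hypothetical.

```python
import numpy as np

def label_vectors(adjective_maps, cells):
    """Build one label vector per form from per-adjective SOM maps.

    adjective_maps: (n_adjectives, rows, cols) association values.
    cells: (n_forms, 2) SOM cell (row, col) assigned to each form.
    Returns (n_forms, n_adjectives): every form inherits its cell's values.
    """
    maps = np.asarray(adjective_maps)
    cells = np.asarray(cells)
    # Advanced indexing gives (n_adjectives, n_forms); transpose to one row per form.
    return maps[:, cells[:, 0], cells[:, 1]].T
```

With 68 adjectives, each tower's label becomes a 68-dimensional vector, and all towers sharing a SOM cell share the same label.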

As the selection of adjectives varies and depends solely on the designer's personal preferences, we treat the adjectives as anchor points that convert input texts into association values comparable to the generated labels. We used word embeddings for this conversion so that Text2Form3D can also process arbitrary words that are not among the adjectives labeled by the designer. We then used the artificial neural network of Text2Form3D to regenerate structural forms by predicting the CEM parameters (i.e., the design inputs) from the text labels.
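
A simple way to turn an arbitrary word into association values over the anchor adjectives is to compare its embedding with each anchor's embedding. The sketch below uses cosine similarity clipped at zero; this particular mapping is an assumption, since the article does not state how embedding vectors are converted into association values, and the toy vectors in the usage example stand in for a real pretrained embedding model.

```python
import numpy as np

def text_to_associations(query_vec, anchor_vecs):
    """Map a query embedding to association values over the anchor adjectives.

    Cosine similarity to each anchor embedding, clipped at zero so that
    unrelated or opposite words contribute nothing (assumed mapping).
    """
    q = np.asarray(query_vec, dtype=float)
    A = np.asarray(anchor_vecs, dtype=float)
    sims = A @ q / (np.linalg.norm(A, axis=1) * np.linalg.norm(q))
    return np.clip(sims, 0.0, 1.0)
```

With toy anchors `[[1, 0, 0], [0, 1, 0]]`, a query vector `[1, 1, 0]` is equally related to both adjectives (about 0.707 each). For a multi-word query, one option is to average the word vectors before the comparison; the resulting vector has the same layout as the generated labels and can be fed to the trained network.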

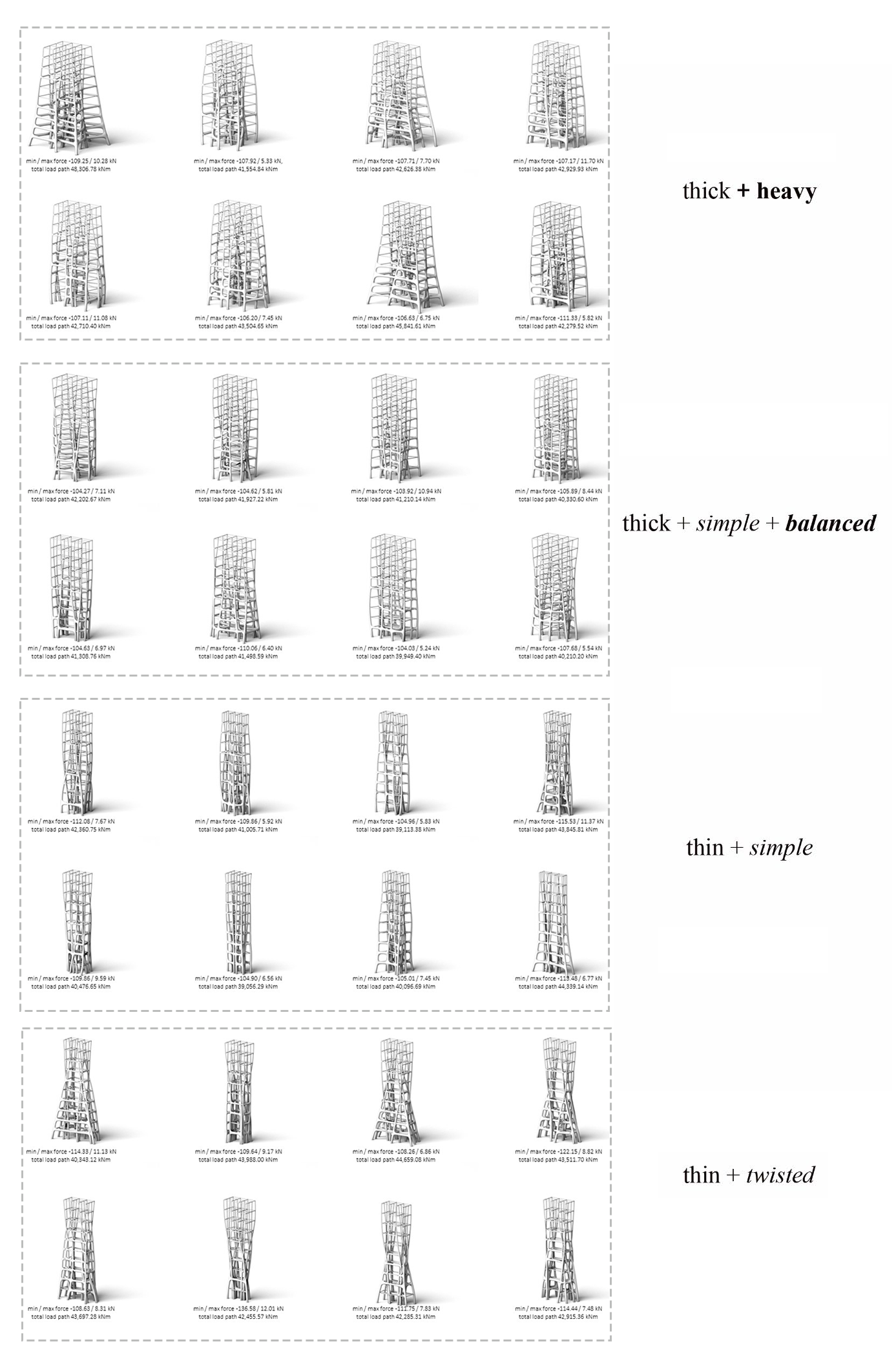

Following the proposed pipeline, four groups of 3D structural forms were generated from different input adjectives, as shown in the figure. Each group displays geometric characteristics that distinguish it from the other groups, indicating that Text2Form3D captured the semantic information of the input adjectives and encoded it into the output geometries.

The proposed workflow demonstrates the effectiveness of the interplay between human designers and AI in designing structural forms through descriptive text and quantitative parameters as inputs.

The presented study focused on exploring and generating 3D structural forms through a text-based AI engine coupled with computational graphic statics. The project proposes a workflow in which structural forms are generated based on static equilibrium and on the designer's semantic interpretation of 3D geometries. This workflow emphasizes the interplay between designers and AI, in which the generative algorithm adapts to the personal preferences of designers and acts as a suggestion engine for the semantic interpretation of structural forms. This approach shows the potential of AI not only for solving well-defined problems but also for tackling weakly defined tasks that stem from the creative nature of design. Finally, an alternative understanding of AI’s role within the design process is presented by proposing a shift from conventional input-to-output processes (e.g., image to image or text to image) to the translation of text into spatial geometries (i.e., text to 3D structural forms).
